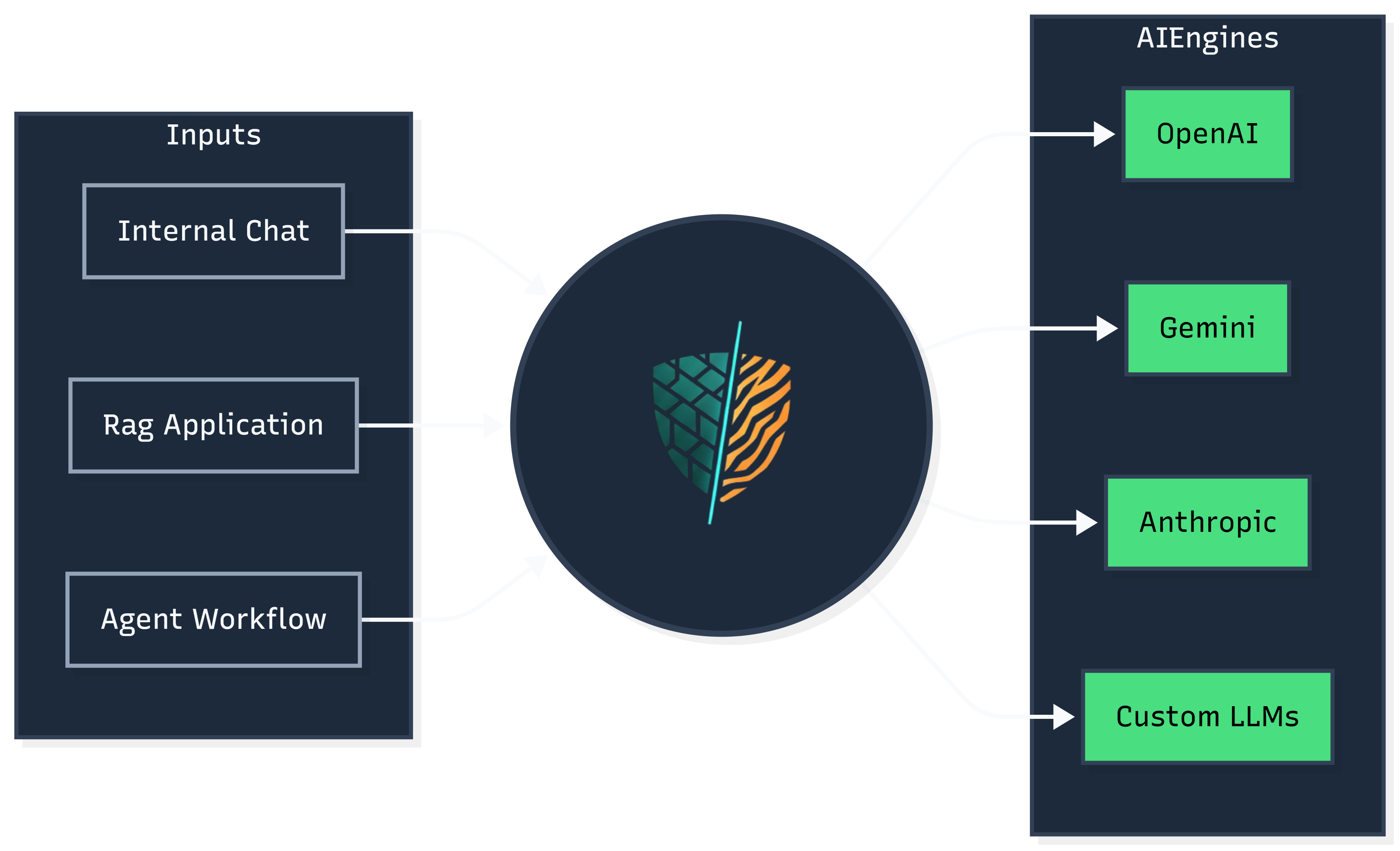

Add an intelligent security layer to your AI models. CrocoTiger validates prompts in real time, prevents prompt injection, and ensures strict contextual compliance.

Designed for reliability, control, and continuous improvement.

CrocoTiger blocks common AI attack patterns with an average accuracy of 99.36% and an average response time of 0.49 ms per query.

Everything you need to secure your AI infrastructure.

Blocks malicious, irrelevant, or manipulated prompts before they reach your model.

Define valid topics using a theme, website content, or your own files.

Ensures every prompt matches the allowed context and subject matter.

Discover how easy it is to secure your AI applications.

Building projects is straightforward. Create multiple independent projects to organize your work, defining scope and boundaries in just a few simple steps.

Verify your setup immediately. Send test questions to your newly created project to confirm it behaves exactly as expected.

Start protecting your LLMs with CrocoTiger.
